This is the reference manual for the quill application, which is a program to help designers of pen-based user interfaces create better gestures. A gesture is a mark made with a pen or stylus that causes the computer to perform an action. For example, if you were editing text, you might use the following gesture to delete a word:

This reference manual describes the features of quill.

This section describes the different types of objects that the user can operate on with quill. In increasing size, they are:

Gestures

Gesture categories

Groups

Sets

Packages

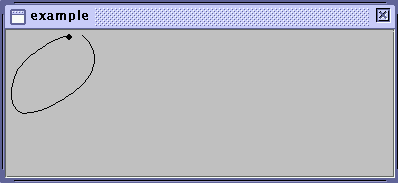

A gesture is a particular mark that invokes a command. For example, the following mark might invoke the "paste" command:

A gesture category is a collection of gestures that tell the recognizer how a type of gesture should be drawn. For example, an application might have gesture categories for "cut", "copy", and "paste" operations. About 15 examples per category are usually adequate, although sometimes more are required. A typical application will have a gesture category for each operation that the designer wants to be available using a gesture. However, if gestures with very different shapes should invoke the same command, recognition may be better if they are in separate gesture categories than if they are all in the same one. For example, if a "square" category includes both very small squares and very large squares, it might be recognized better as two categories, "big square" and "small square."

A group is used by the designer to organize gestures. For example, one might have a "File" group for gestures dealing with file operations, a "Format" group for formatting gestures, etc. The recognizer ignores groups.

A set is a collection of gesture categories and/or groups. quill uses two different kinds of sets for two different purposes. A training set is a set that is used to train the recognizer. A test set is used to test the recognizability of the training set. For example, you might have a test set whose gestures are very neatly drawn and one whose gestures are sloppily drawn, to see how well neat vs. sloppy gestures are recognized.

There must be a training set for the recognizer to do recognition.

A package holds all the gesture information for one application, although applications that have multiple modes may require more than one package. A package contains a training set and may contain one or more test sets. quill creates one top-level window for each package. All quill data files store exactly one package each (although legacy gesture files may each store a set or a gesture category).

Before the elements of the interface can be described, some interaction terms need to be defined.

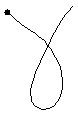

The quill main window is shown below:

Figure: main window

The remainder of this section describes the various parts of the main window.

This area shows the training set and the groups, and the gesture categories they contain. Individual gestures are not shown here. A line showing a category might look like this:

Clicking on the name, folder icon, or gesture icon selects an object (and deselects everything else in the tree). Clicking on the warning icon scrolls the log at the bottom of the window so that the relevant warning is in view. Shift-clicking extends the selection. Control-clicking toggles the selection of one object. Double-clicking creates a new window that shows the object.

Selected objects in the tree view may be edited using the Edit menu. The placement of newly created objects (see Gesture menu section) and the behavior of the gesture drawing area are determined by the selection.

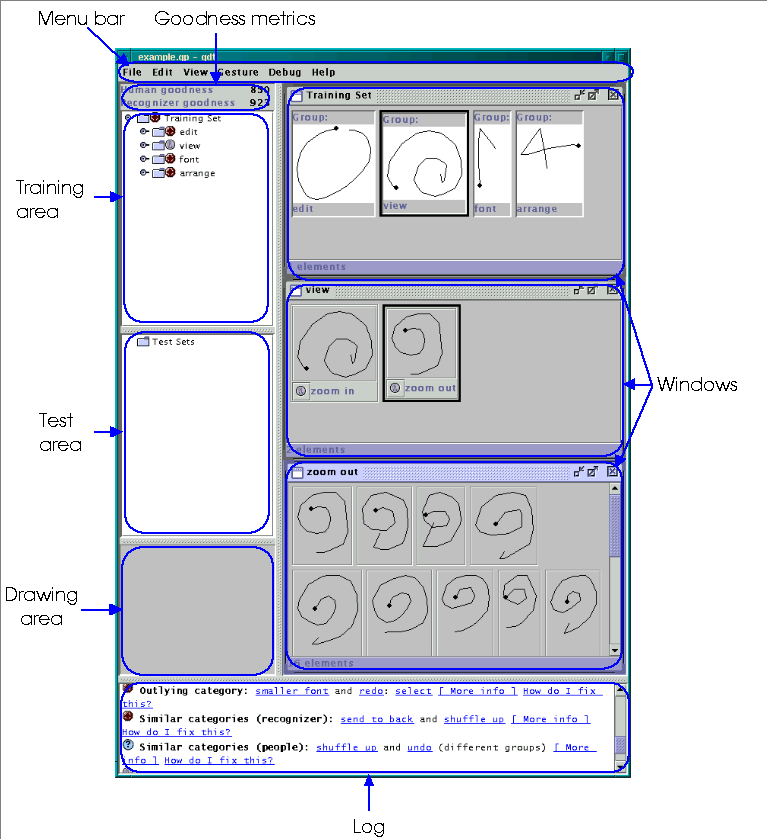

Windows appear in the right part of the main window and are used to browse gesture categories, groups, and sets and to show information about suggestions in the log window (such as misrecognized training examples). Windows can be resized by dragging their title bars up and down. Clicking anywhere in a window will select it. Clicking the close box on the right side of the title bar will close the window.

In windows that display objects, individual objects may be selected using the same mechanisms as in the tree view. That is, clicking on an object selects it (and deselects everything else in the subwindow). Shift-clicking extends the selection. Control-clicking toggles the selection of one object. Double-clicking creates a new view of the object in a new window.

Selected objects in windows may be edited using the Edit menu. The placement of newly created objects (see Gesture menu section) and the behavior of the gesture drawing area are determined by the selection.

The following subsections describe specifics of particular types of windows.

This window shows the gesture categories and groups contained in a gesture set (e.g., the training set or a test set).

This window shows the gesture categories contained in a group.

This window shows the gestures for a gesture category.

This window shows a single gesture.

This window shows gestures that were misrecognized. Each gesture has a label and a button. The green label says which gesture category the gesture is supposed to be. The red button says which gesture category it was recognized as. Clicking on the red button creates a window that shows the gesture category it was recognized as.

This area is used for drawing gestures. If a gesture category is selected in the tree view or if a gesture category window is active, a gesture drawn here will be added to the selected gesture category. If a gesture window is active, a gesture drawn here will replace it. If a gesture group or set is selected in the tree or a gesture group or set window is active, an example drawn here will be recognized. Results of the recognition will be shown in the log, and the label for the recognized gesture will turn green in the training area and in any windows in which it appears.

During certain operations (e.g., training the recognizer), the application is unable to accept drawn gestures, and during this time this area will turn gray.

This view is the primary means for the application to communicate to the user about what it is doing. Many different types of messages may appear here. Some examples are:

Notification of autosave.

Notices about problems that the program has detected with the gesture set.

Recognition results.

Errors.

This section describes the menus and their operations.

Operations:

New package. Create a new package. (Note: each file contains exactly one package.)

Open. Open a package file. If another package is open, this creates a new top-level window.

Save. Save the current package. If the current package does not need to be saved, this is greyed out.

Save As... Save the current package using a different name or in a different directory.

Save all. Save all open packages (that need saving).

Print. Print the active window.

Close. Close the current package. It will prompt the user if the package is unsaved.

Quit. Quit quill. It will prompt the user if any packages are unsaved.

Operations:

Cut. Remove the current selection and put it on the clipboard.

Copy. Copy the current selection to the clipboard.

Paste. Copy the contents of the clipboard into the package.

Delete. Delete the current selection.

Select all. Select everything in the current view.

Undo. Undo the last operation.

Redo. Redo the last undone operation.

Preferences. Bring up a window that allows preferences to be set.

For rules about where in the package pasted objects are placed, see the rules for new objects in Gesture menu.

Operations:

New. Create a new view of the selected object(s).

Suggestions on/off. Enable/disable the display of new suggestions in the log.

Clear suggestions. Clear all suggestions in the log.

Operations:

New category. Create a new gesture category.

New group. Create a new group.

New test set. Create a new test set.

Rename. Rename a gesture category, group, or test set.

Enable. Enable the current selection. All objects are enabled by default. Enabled objects are used to train the recognizer and are analyzed by the program for possible suggestions.

Disable. Disable the current selection. Disabled objects are not used in training the recognizer, and are not analyzed for most types of problems.

Train set / Set already trained. Train the recognizer with the current training set. This is only enabled if the recognizer is neither trained nor currently being trained. Any change to the training set makes the recognizer untrained.

Analyze set. Examine the set using the enabled analyzers.

Test recognition... Test the recognition of the training set by trying to recognize the selected test set(s). Problems are reported in the log.

Newly created objects will be placed in the selected object, or in the container object closest to the selected object that can contain the new object. For example, a group cannot be inside of another group, so if gesture A inside group B is selected and the "New Group" operation is performed, the new group will be added after B (i.e., as a sibling of B), not inside of (i.e., as a child of) A or B.
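The placement rule can be read as a walk upward from the selected object to the nearest object that is allowed to hold the new one. The Python snippet below is only an illustration of that reading, not quill's code: `CAN_CONTAIN` and `find_container` are hypothetical names, the containment table is taken from the object descriptions in this manual, and the sketch ignores where among its siblings the new object is placed.

```python
# Which kinds of object each container may hold (an assumption based on
# the object descriptions in this manual).
CAN_CONTAIN = {
    "package": {"set"},
    "set": {"group", "category"},
    "group": {"category"},
    "category": {"gesture"},
    "gesture": set(),
}

def find_container(selection_path, new_kind):
    """Return the name of the object that should receive a new object.

    selection_path lists (kind, name) pairs from the package down to the
    selected object. The search starts at the selection and moves outward.
    """
    for kind, name in reversed(selection_path):
        if new_kind in CAN_CONTAIN[kind]:
            return name
    return None  # nothing on the path can hold the new object

# Category A inside group B is selected.
path = [("package", "P"), ("set", "training"), ("group", "B"), ("category", "A")]
print(find_container(path, "group"))     # training (sibling of B)
print(find_container(path, "category"))  # B
print(find_container(path, "gesture"))   # A
```

With category A selected inside group B, a new group lands in the enclosing set, which matches the example in the paragraph above.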

Operations:

Tutorial. Creates a separate window that shows the tutorial.

Reference. Creates a separate window that shows this document.

About quill. Gives brief information about the program.

To help the designer create good gestures, quill periodically analyzes the training set and provides suggestions. There are several different analyzers, which are described below.

One of the analyzers looks for gestures in the training set that are recognized as something other than the gesture category they belong to. Such gestures are usually caused by one of two things:

Spurious gesture. The designer may have lifted the pen too soon and truncated the gesture, accidentally put in a single dot, or made some other mistake which caused a gesture to be in the training set that was not intended. This is easy to fix by simply removing the mistaken gesture and drawing another one.

Gesture categories that look very similar to the recognizer. Sometimes the designer may create two gesture categories that look very similar to the recognizer. This problem will normally be detected explicitly (see Gesture Categories Too Similar for Recognizer), but may also cause misrecognized gestures. For example, if gesture categories A and B are similar to each other, then some gestures from A may be misrecognized as B and vice versa.

Another analyzer looks at all possible pairs of gesture categories and computes how similar they appear to the recognizer. If they are too similar, it will be more difficult for the recognizer to tell them apart, and so it will be more likely to misrecognize gestures. This can be corrected by changing the gesture categories so that they look more different to the recognizer, usually by changing their training gestures to have different values for one or more features. When quill detects that two gesture categories are too similar, it will provide information about how to make them more different.

You rarely want to have multiple gesture categories with the same name. quill often refers to gesture categories by name in its displays and messages, so multiple gesture categories with the same name will be confusing to users and to other designers.

Also, when using test sets, the recognizer uses gesture category names to determine if the recognition is correct or not. If gesture category names are not unique, test gestures which should be marked incorrect may be marked correct.

Another analyzer looks at all possible pairs of gesture categories and computes how similar humans will perceive them to be. Although it is unproved, we think it likely that if dissimilar operations have similar gesture categories, people may confuse them and find it harder to learn and remember them. On the other hand, it is likely fine for similar operations to have similar gesture categories, and may even be beneficial for learning and remembering them.

Normally, it is easier for the recognizer to recognize gesture categories if they are very different from one another. However, sometimes the type of recognizer that quill uses can have difficulty recognizing gesture categories if many gesture categories are similar in some way but one is very different from them (i.e., is an outlier). When this problem occurs, quill will notify you and tell you how to change the gesture category to make it more like the others and improve the recognition.

Sometimes designers misdraw training gestures, especially if they are unused to using a pen interface. quill looks for training gestures that are very different from others for the same gesture category, and notifies the designer of those gestures since they may be misdrawn. If you see a gesture labeled an outlier that is not misdrawn, you should press the "Gesture Ok" button to tell the computer that this outlier is ok.

Designers can take advantage of most of the features of quill without knowing how the gesture recognizer works. However, in some cases it will be useful to know how the recognizer "sees" gestures in order to improve their recognition. In the future, quill may support multiple recognizers, but as of now it only supports the recognizer by Dean Rubine [1]. This recognizer is described in the following section.

Rubine's recognizer is a feature-based recognizer because it categorizes gestures by measuring different features of the gestures. Features are measurable attributes of gestures, and are often geometric. Examples are length and the distance between the first and the last points. To recognize an unknown gesture, the values of the features are computed for it, and those feature values are compared with the feature values of the gestures in the training set. The unknown example is recognized as the gesture whose feature values are most like those of the unknown gesture.

The following sections describe in more detail how the recognizer works. First, the features are explained. Then the recognizer training process is described. Finally, recognition is described in more detail.

quill uses the following features:

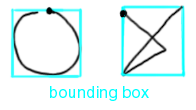

This is not really a feature in itself, but several of the features use it. The bounding box for a gesture is the smallest upright rectangle that encloses the gesture.

This feature is how rightward the gesture goes at the beginning. This feature is highest for a gesture that begins directly to the right, and lowest for one that begins directly to the left. Only the first part of the gesture (the first 3 points) is significant.

This feature is how upward the gesture goes at the beginning. This feature is highest for a gesture that begins directly up, and lowest for one that begins directly down. Only the first part of the gesture (the first 3 points) is significant.
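As a rough illustration of the two initial-direction features, the Python sketch below computes the cosine and sine of the direction from the first point to the third point, which is how Rubine's paper defines them. The function name `initial_direction` is hypothetical, the sketch assumes that y increases upward (on screens where y increases downward the sign of the second value flips), and quill's own implementation may differ in detail.

```python
import math

def initial_direction(points):
    """Return (rightward, upward) for the start of a gesture.

    points is a list of (x, y) tuples with at least three entries.
    Both values lie between -1 and 1.
    """
    (x0, y0), (x2, y2) = points[0], points[2]
    dx, dy = x2 - x0, y2 - y0
    dist = math.hypot(dx, dy)
    if dist == 0:
        return 0.0, 0.0  # assumption: a stationary start has no direction
    return dx / dist, dy / dist

# A stroke that starts out directly to the right, then turns upward.
print(initial_direction([(0, 0), (1, 0), (2, 0), (2, 5)]))  # (1.0, 0.0)
```

Because only the first and third points are used, the later upward turn in the example has no effect on either value.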

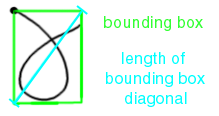

This feature is the length of the bounding box diagonal.

This feature is the angle that the bounding box diagonal makes with the bottom of the bounding box.
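The two bounding box features can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than quill's code: `bounding_box` and `diagonal_features` are hypothetical names, and the angle is returned in radians.

```python
import math

def bounding_box(points):
    """Return (min_x, min_y, max_x, max_y) for a list of (x, y) points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)

def diagonal_features(points):
    """Return (diagonal length, diagonal angle in radians)."""
    min_x, min_y, max_x, max_y = bounding_box(points)
    width, height = max_x - min_x, max_y - min_y
    # atan2 also copes with a zero-width box (a purely vertical gesture).
    return math.hypot(width, height), math.atan2(height, width)

# This gesture spans a box 3 wide and 4 high, so the diagonal length is 5.
length, angle = diagonal_features([(0, 0), (3, 1), (1, 4)])
print(length)
print(math.degrees(angle))
```

A square bounding box gives an angle of 45 degrees; wider boxes give smaller angles and taller boxes give larger ones.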

This feature is the distance between the first and last points of the gesture.

This feature is the horizontal distance that the end of the gesture is from the start, divided by the distance between the ends. If the end is to the left of the start, this feature is negative.

This feature is the vertical distance that the end of the gesture is from the start, divided by the distance between the ends. If the end is below the start, this feature is negative.
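The three endpoint features might be computed as in the following Python sketch. The name `endpoint_features` is hypothetical, y is assumed to increase upward, and returning zeros for a gesture that ends where it starts is a choice made for this sketch only.

```python
import math

def endpoint_features(points):
    """Return (distance, horizontal ratio, vertical ratio) between the ends."""
    (x0, y0), (xn, yn) = points[0], points[-1]
    dx, dy = xn - x0, yn - y0
    dist = math.hypot(dx, dy)
    if dist == 0:
        return 0.0, 0.0, 0.0  # assumption: closed gesture, ratios undefined
    return dist, dx / dist, dy / dist

# The gesture ends 3 units to the left of and 4 units below its start.
print(endpoint_features([(0, 0), (5, 5), (-3, -4)]))
```

Both ratios are negative in the example because the end is to the left of and below the start.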

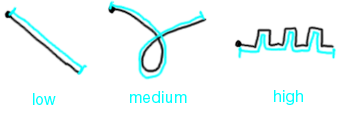

This feature is the total length of the gesture.

This feature is the total amount of counter-clockwise turning. It is negative for clockwise turning.

This feature is the total amount of turning that the gesture does in either direction.
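The length and the two turning features can be sketched together in Python, since they all come from walking along the segments of the gesture. The names `turning_angles` and `path_features` are hypothetical, angles are in radians, and the sign convention (counter-clockwise is positive) assumes that y increases upward.

```python
import math

def turning_angles(points):
    """Signed angle between each pair of consecutive segments."""
    angles = []
    for (x0, y0), (x1, y1), (x2, y2) in zip(points, points[1:], points[2:]):
        ax, ay = x1 - x0, y1 - y0  # incoming segment
        bx, by = x2 - x1, y2 - y1  # outgoing segment
        cross = ax * by - ay * bx
        dot = ax * bx + ay * by
        angles.append(math.atan2(cross, dot))
    return angles

def path_features(points):
    """Return (total length, total signed turning, total absolute turning)."""
    length = sum(math.dist(p, q) for p, q in zip(points, points[1:]))
    angles = turning_angles(points)
    return length, sum(angles), sum(abs(a) for a in angles)

# Right, up, right: one left turn and one right turn cancel out.
zigzag = [(0, 0), (1, 0), (1, 1), (2, 1)]
print(path_features(zigzag))
```

For the zigzag, the signed turning is zero while the absolute turning is 180 degrees (pi radians), which shows why the two features carry different information.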

This feature is intuitively how sharp, or pointy, the gesture is. A gesture with many sharp corners will have a high sharpness. A gesture with smooth, gentle curves will have a low sharpness. A gesture with no turns or corners will have the lowest sharpness.

More precisely, all gestures are composed of many small line segments, even the parts that look curved. This feature measures the angular change between each line segment, squares them, and adds them all together. The angular change is shown here:
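In code, the sum of squared angular changes might look like the Python sketch below. It is an illustration only: `sharpness` is a hypothetical name and angles are measured in radians.

```python
import math

def sharpness(points):
    """Sum of the squared angular change between consecutive segments."""
    total = 0.0
    for (x0, y0), (x1, y1), (x2, y2) in zip(points, points[1:], points[2:]):
        ax, ay = x1 - x0, y1 - y0  # incoming segment
        bx, by = x2 - x1, y2 - y1  # outgoing segment
        angle = math.atan2(ax * by - ay * bx, ax * bx + ay * by)
        total += angle ** 2
    return total

corner = [(0, 0), (1, 0), (1, 1)]          # one 90 degree corner
gentle = [(0, 0), (1, 0), (2, 1), (2, 2)]  # two 45 degree turns
straight = [(0, 0), (1, 0), (2, 0)]        # no turning at all
print(sharpness(corner), sharpness(gentle), sharpness(straight))
```

Both `corner` and `gentle` turn through 90 degrees in total, but squaring makes the single sharp corner score higher than the two gentler turns.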

For every gesture, the recognizer computes a vector of these features called the feature vector. The feature vector is used in training and recognition as follows.

The recognizer works by first being trained on a gesture set. Then it is able to compare new examples with the training set to determine to which gesture the new example belongs.

During training, for each gesture the recognizer uses the feature vectors of the examples and computes a mean feature vector and covariance matrix (i.e., a table indicating how the features vary together and what their standard deviations are) for the gesture.
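The training step can be sketched in plain Python as follows. The names `mean_vector` and `covariance_matrix` are hypothetical, and dividing by n - 1 (the sample covariance) is an assumption of this sketch; the manual does not say which normalization quill uses.

```python
def mean_vector(vectors):
    """Average each feature over all example feature vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def covariance_matrix(vectors):
    """Sample covariance of the features (needs at least two examples)."""
    n = len(vectors)
    mean = mean_vector(vectors)
    dims = len(mean)
    cov = [[0.0] * dims for _ in range(dims)]
    for v in vectors:
        for i in range(dims):
            for j in range(dims):
                cov[i][j] += (v[i] - mean[i]) * (v[j] - mean[j])
    return [[c / (n - 1) for c in row] for row in cov]

# Three examples with two features each (made-up numbers).
examples = [[1.0, 2.0], [3.0, 6.0], [5.0, 10.0]]
print(mean_vector(examples))        # [3.0, 6.0]
print(covariance_matrix(examples))  # [[4.0, 8.0], [8.0, 16.0]]
```

The diagonal entries are the variances of the individual features (the squares of their standard deviations), and the off-diagonal entries show how pairs of features vary together.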

When an example to be recognized is entered, its feature vector is computed and is compared to the mean feature vector of all gestures in the gesture set. The candidate example is recognized as being part of the gesture whose mean feature vector is closest to the feature vector of the candidate example.
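A much simplified version of this comparison is sketched below in Python. It uses plain Euclidean distance to the mean feature vectors, which is an assumption made to keep the example short; a full implementation of Rubine's recognizer also uses the covariance information gathered during training to weight the features. The name `recognize` and the feature values are hypothetical.

```python
import math

def recognize(candidate, class_means):
    """Return the name whose mean feature vector is nearest the candidate."""
    return min(class_means,
               key=lambda name: math.dist(candidate, class_means[name]))

# Mean feature vectors from training (made-up two-feature example).
means = {"cut": [1.0, 0.0], "paste": [0.0, 1.0]}
print(recognize([0.9, 0.2], means))  # cut
```

The candidate is always assigned to some gesture, even if it is far from every mean, so a real recognizer may also want a way to reject poor matches.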

For a feature-based recognizer to work perfectly, the values of each feature should be normally distributed within a gesture, and should vary greatly between gestures. In practice, this is rarely exactly true, but it is usually close enough for good recognition.

quill tries to predict when people will perceive gestures as very similar. Its prediction is based on geometric features, some of which the recognizer also uses. There are some features that are used for similarity prediction that are not used for recognition. These features are described below.

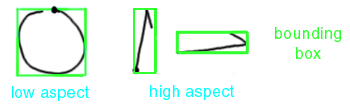

This feature is the extent to which the bounding box differs from a square. An example with a square bounding box has an aspect of zero.

This feature is how curvy, as opposed to straight, the gesture is. Gestures with many curved lines have high curviness while ones composed of straight lines have low curviness.

A gesture with no curves has zero curviness. There is no upper limit on curviness.

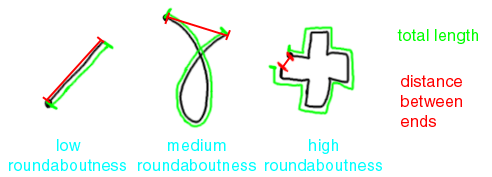

This feature is the length of the gesture divided by its endpoint distance.

The lowest value it can have is 1. There is no upper limit.
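A short Python sketch of this ratio follows. The function name is hypothetical, and returning infinity for a gesture that ends exactly where it starts is an assumption of the sketch.

```python
import math

def length_over_endpoint_distance(points):
    """Total path length divided by the distance between the two ends."""
    length = sum(math.dist(p, q) for p, q in zip(points, points[1:]))
    endpoint = math.dist(points[0], points[-1])
    if endpoint == 0:
        return math.inf  # assumption: closed gesture, ratio is unbounded
    return length / endpoint

print(length_over_endpoint_distance([(0, 0), (1, 0), (2, 0)]))  # 1.0
print(length_over_endpoint_distance([(0, 0), (3, 0), (3, 4)]))  # about 1.4
```

A straight line gives exactly 1 because its length equals its endpoint distance; any detour makes the path longer than the direct route, so the value grows.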

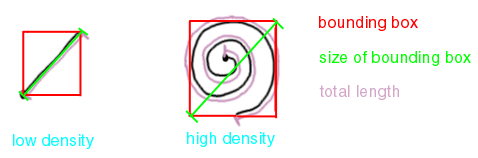

This feature is, intuitively, how dense the lines in the gesture are. Formally, it is the length divided by the size of the bounding box.

The lowest value it can have is 1. There is no upper limit.
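The Python sketch below shows one way to compute this feature. It assumes that the "size" of the bounding box means the length of its diagonal, which is consistent with the stated minimum of 1 (a straight line is exactly as long as the diagonal of its bounding box). The name `density` and the handling of a single dot are also assumptions.

```python
import math

def density(points):
    """Path length divided by the bounding box diagonal (assumed size)."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    diagonal = math.hypot(max(xs) - min(xs), max(ys) - min(ys))
    length = sum(math.dist(p, q) for p, q in zip(points, points[1:]))
    if diagonal == 0:
        return 1.0  # assumption: treat a single dot like a straight line
    return length / diagonal

print(density([(0, 0), (3, 4)]))                  # 1.0, a straight line
print(density([(0, 0), (4, 0), (0, 0), (4, 0)]))  # 3.0, a scribble
```

The scribble retraces the same segment three times, so it packs three times as much ink into the same bounding box as a single straight stroke.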

This feature is the logarithm of the area of the bounding box.

This feature is the logarithm of the aspect.

The logarithm is a mathematical function on positive numbers [2]. The logarithm of a number x is written as "log(x)". For our purposes, its important properties are:

It makes numbers smaller: log(a) < a.

It preserves ordering: if a > b, then log(a) > log(b).

The logarithm of 1 is 0. For all positive numbers less than one, the logarithm is negative. For all numbers greater than one, the logarithm is positive.
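These properties are easy to check numerically. The Python sketch below uses the natural logarithm from the standard `math` module; the manual does not say which base quill uses, so the base is an assumption, but the properties hold for any base greater than one. The helper name `log_properties_hold` is hypothetical.

```python
import math

def log_properties_hold(a, b):
    """Check two properties for positive numbers a and b with a > b."""
    smaller = math.log(a) < a            # the logarithm is below the number
    ordered = math.log(a) > math.log(b)  # ordering is preserved
    return smaller and ordered

print(math.log(1.0))                     # 0.0
print(math.log(0.5) < 0)                 # True
print(math.log(20.0) > 0)                # True
print(log_properties_hold(100.0, 10.0))  # True
```

Because the logarithm compresses large values, a feature such as the logarithm of the bounding box area changes much less between a large gesture and a very large one than the raw area does.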

The logarithm function looks like this:

[1] Rubine, D. "Specifying Gestures by Example". Proceedings of SIGGRAPH '91, p. 329-337.

[2] For this reference, we'll pretend that you cannot take the logarithm of a negative number.